Course Description

This course will help you understand how Hadoop, Spark, and their ecosystem handle the storage and processing of large data sets in a distributed environment.

Building Data Pipelines using Cloud

The Big Data Architect Masters Program is designed for professionals who want to deepen their knowledge of Big Data. The program follows current industry standards and gives you end-to-end coverage of Big Data technologies. It is curated by industry experts to provide hands-on training with tools that are widely used across the Big Data domain.

The Big Data Architect Masters Program is designed to empower professionals to develop relevant competencies and accelerate their career progression in Big Data technologies through complete hands-on training.

Being a Big Data Developer requires you to learn multiple technologies, and this program will ensure you become an industry-ready Big Data Architect who can provide solutions for Big Data projects.

At NPN Training we believe in the philosophy of “Learn by doing”, so we provide complete hands-on training with real-time project development.

Most Meticulously designed Data Engineering program

This course will help you understand how Hadoop, Spark, and their ecosystem handle the storage and processing of large data sets in a distributed environment.

In this course, you will learn one of the most popular enterprise messaging and streaming platforms: the basics of creating an event-driven system using Apache Kafka and Spark Structured Streaming, and the ecosystem around them.

1 Capstone Project

Amazon Web Services (AWS) offers a unique opportunity to build scalable, robust, and highly available systems in the cloud. This course gives you an overview of the different AWS services that you can use to build a Big Data solution.

In this course, you will learn how to harness the power of Apache Spark and powerful clusters running on the Azure Databricks platform to run large data engineering workloads in the cloud.

This course will introduce you to the additional tools and frameworks you need to become a successful data engineer.

Tried & tested curriculum to make you a solid Data Engineer

Course description: This course will help you learn one of the most powerful in-memory cluster computing frameworks.

Data Engineering Concepts and Hadoop 2.x (YARN)

1 Quiz

Learning Objectives – This module introduces you to the core concepts, processes, and tools you need to know in order to get a foundational knowledge of data engineering. You will gain an understanding of the modern data ecosystem and the role Data Engineers, Data Scientists, and Data Analysts play in this ecosystem.

Topics –

Hadoop Commands and Configurations

1 Quiz 1 Assignment

Learning Objectives – In this module, you will learn the commands for working with HDFS and YARN, and how to execute and monitor jobs.

Topics –

Structured Data Analysis using Hive

1 Quiz 1 Assignment

Learning Objectives – In this module, you will understand Hive concepts and Hive data types, and learn how to load and query data in Hive, run Hive scripts, and use Hive UDFs.

Topics –

Getting Started with Apache Spark + RDDs

1 Quiz 2 Assignments

Learning Objectives – In this module, you will learn about the Spark architecture in comparison with the Hadoop ecosystem. You will also learn one of the fundamental building blocks of Spark, RDDs, and the related manipulations for implementing business logic (transformations, actions, and functions performed on RDDs).

Topics –

Exploring Spark SQL and DataFrame API

1 Quiz 1 Assignment

Learning Objectives – In this module, you will learn about Spark SQL which is used to process structured data with SQL queries. You will learn about DataFrames and Datasets in Spark SQL and perform SQL operations on DataFrames.

Topics –

Deep Dive into the DataFrame API

1 Quiz 1 Assignment

Learning Objectives – In this module, you will learn some of the advanced concepts of the DataFrame API.

Topics –

Packaging, Deploying and Debugging

1 Quiz

Learning Objectives – In this module, you will learn which aspects to take care of when deploying and improving Spark applications.

Topics –

Best Practices and Performance Tuning

1 Quiz

Learning Objectives – In this module, you will learn which aspects to take care of when deploying and improving Spark applications.

Topics –

Course description: Apache Kafka is a popular tool used in many big data analytics projects to get data from other systems into a big data system. In this course, you will develop Apache Kafka applications that send data to and receive data from Kafka clusters. By the end of this course, you will be able to set up a personal Kafka development environment, master the concepts of topics, partitions, and consumer groups, develop a Kafka producer to send messages, and develop a Kafka consumer to receive messages. You will also practice using the Kafka command-line interfaces for producing and consuming messages.

Module 01 – Getting Started with Kafka and Core APIs

1 Quiz 1 Assignment

Learning Objectives – In this module, you will understand Kafka and Kafka Architecture.

Topics –

Deep Dive Kafka Producer and Consumer API

1 Quiz 1 Assignment

Learning Objectives – In this module, you will learn the advanced Kafka core APIs, i.e. the Producer API, the Consumer API, and Kafka Connect.

Topics –

Streaming Data with Spark Structured Streaming

1 Quiz 1 Assignment

Learning Objectives – In this module, you will learn how to process streaming data with Spark Structured Streaming.

Topics –

Advanced Stream Processing

1 Quiz 1 Assignment

Learning Objectives – In this module, you will learn advanced stream processing concepts.

Topics –

Course description: This course gives you an overview of the different AWS services that you can use to build a Big Data solution.

Getting Started with AWS, IAM and S3 Storage

1 Quiz

Learning Objectives – In this module, you will learn the fundamentals of AWS, IAM and S3 Storage.

Topics –

Big Data Processing with EC2 and EMR

1 Quiz 1 Assignment

Learning Objectives – In this module, you will understand Amazon EMR instances and how to launch a Spark cluster.

Topics –

Streaming and Funneling Data with Amazon Kinesis

1 Quiz 1 Assignment

Learning Objectives – In this module, you will learn to harness the power of real-time streaming using the Kinesis family of services, Kinesis Data Streams (KDS), Kinesis Data Firehose (KDF), and Kinesis Data Analytics (KDA), to construct high-throughput, low-latency data pipelines across a variety of architectural components, leading to scalable and loosely coupled systems.

Topics –

Building ETL Data Pipeline using AWS Glue & Athena

1 Quiz 1 Assignment

Learning Objectives – In this module, you will learn how to build ETL data pipelines using AWS Glue and Athena.

Topics –

Serverless Architecture using AWS Lambda

1 Quiz 1 Assignment

Learning Objectives – In this module, you will learn how to use the AWS SDK for Java to work with Amazon S3 buckets.

Topics –

Automate AWS Infrastructure

1 Quiz 1 Assignment

Funneling Data with Kinesis Firehose + Kinesis Analytics

3 Hours 2 Assignments

Learning Objectives – In this module, you will learn how to use Amazon Kinesis Data Firehose and Kinesis Data Analytics.

Topics –

Course description: In this course you will learn one of the most popular web-based notebooks, which enables interactive data analytics.

Exploring Azure Databricks

3 Hours 2 Assignments

Learning Objectives –

Topics –

Accessing Data from Azure Data Lake Storage

3 Hours 2 Assignments

Learning Objectives –

Topics –

Exploring Databricks Platform

3 Hours 2 Assignments

Learning Objectives – In this module, you will understand the Databricks platform.

Topics –

We follow an assessment- and project-based approach to maximize your learning. For each module there will be multiple assessments/problem statements.

You will have a quiz for each of the modules covered in the previous class/week. These tests usually last 15-20 minutes.

Each candidate will be given an exercise to solve for evaluation.

You will be assigned computational and theoretical homework assignments to complete.

A coding hackathon will be conducted in the middle of the course. It tests how quickly and accurately you can apply the concepts, tools, and techniques covered so far to a given problem statement.

At the end of each course there will be a real-world Capstone Project that enables you to build an end-to-end solution to a real-world problem. You will be required to write a project report and present it to an audience.

110 hours of extensive classroom training.

36 sessions of 3 hours each. Course duration: 4 months

For each module, multiple hands-on exercises, assignments, and quizzes are provided in Google Classroom.

We have a community forum for all our students, where you can enrich your learning through peer interaction.

On completion of the project, NPN Training certifies you as a “Big Data Architect” based on the project.

Interview Preparation Kit

This program (Big Data Architect Masters Program) comes with a portfolio of industry-relevant POCs, use cases, and project work.

Unlike other institutes, we do not present use cases as projects; we clearly distinguish between a use case and a project.

This project generates dynamic mock data in real time based on a schema. The data can then be used by real-time processing systems such as Apache Storm or Spark Streaming.

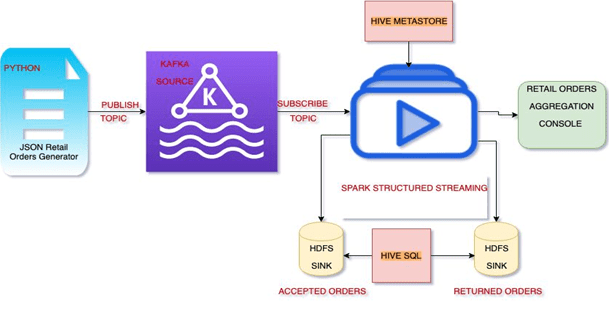
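The schema-driven mock data generator described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the schema layout, the field names, and the helper functions `generate_value`, `generate_record`, and `generate_records` are hypothetical and are not taken from the project itself.

```python
import json
import random
import string
from datetime import datetime, timezone

# Hypothetical schema: field name -> type spec. The layout is an assumption
# made for this sketch, not the project's actual schema format.
SCHEMA = {
    "order_id": {"type": "int", "min": 1, "max": 100000},
    "customer": {"type": "string", "length": 8},
    "amount": {"type": "float", "min": 1.0, "max": 500.0},
    "status": {"type": "choice", "values": ["NEW", "PAID", "CANCELLED"]},
    "created_at": {"type": "timestamp"},
}


def generate_value(spec, rng):
    """Return one random value that matches a single field spec."""
    kind = spec["type"]
    if kind == "int":
        return rng.randint(spec["min"], spec["max"])
    if kind == "float":
        return round(rng.uniform(spec["min"], spec["max"]), 2)
    if kind == "string":
        return "".join(rng.choice(string.ascii_lowercase) for _ in range(spec["length"]))
    if kind == "choice":
        return rng.choice(spec["values"])
    if kind == "timestamp":
        return datetime.now(timezone.utc).isoformat()
    raise ValueError(f"unsupported field type: {kind}")


def generate_record(schema, rng):
    """Build one mock record with a value for every field in the schema."""
    return {name: generate_value(spec, rng) for name, spec in schema.items()}


def generate_records(schema, count, seed=None):
    """Yield `count` mock records; pass a seed for repeatable output."""
    rng = random.Random(seed)
    for _ in range(count):
        yield generate_record(schema, rng)


if __name__ == "__main__":
    # Print a few records as JSON lines, the shape a streaming consumer could read.
    for record in generate_records(SCHEMA, 3, seed=42):
        print(json.dumps(record))
```

Emitting one JSON object per line keeps the output easy to pipe into a message queue or a file that a streaming job watches.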

The purpose of the project is to subscribe to a Kafka topic using the Spark Structured Streaming read stream API. The JSON records are generated automatically by a Python retail data generator script. We use an HDFS sink in CSV format to write the accepted and rejected orders to different locations in HDFS, and aggregations such as the average order amount and the count of order quantity are written to the console. The Hive tables orders and orders reject are used to query the accepted and rejected retail orders.

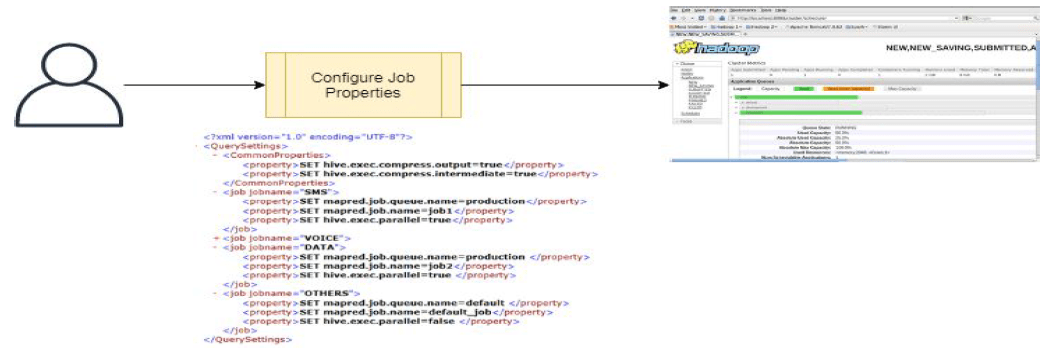
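The accept/reject routing and the console aggregates described above can be illustrated without a cluster. The following is a plain-Python sketch of the logic only, not the project's Spark Structured Streaming code. The field names (`order_id`, `quantity`, `amount`), the acceptance rule, and the reading of "count of order quantity" as a sum of quantities are all assumptions made for this example.

```python
import json
from statistics import mean


def is_valid(order):
    """Hypothetical acceptance rule: quantity and amount must both be positive."""
    if not isinstance(order, dict):
        return False
    quantity = order.get("quantity")
    amount = order.get("amount")
    return (
        isinstance(quantity, int) and quantity > 0
        and isinstance(amount, (int, float)) and amount > 0
    )


def split_orders(json_lines):
    """Route each JSON line to the accepted or rejected list."""
    accepted, rejected = [], []
    for line in json_lines:
        try:
            order = json.loads(line)
        except json.JSONDecodeError:
            # Unparseable input is kept as raw text on the rejected side.
            rejected.append({"raw": line})
            continue
        if is_valid(order):
            accepted.append(order)
        else:
            rejected.append(order)
    return accepted, rejected


def summarize(accepted):
    """Compute the aggregates that the project writes to the console."""
    if not accepted:
        return {"average_amount": 0.0, "total_quantity": 0}
    return {
        "average_amount": round(mean(o["amount"] for o in accepted), 2),
        "total_quantity": sum(o["quantity"] for o in accepted),
    }


if __name__ == "__main__":
    sample = [
        '{"order_id": 1, "quantity": 2, "amount": 50.0}',
        '{"order_id": 2, "quantity": 0, "amount": 20.0}',
        '{"order_id": 3, "quantity": 1, "amount": 100.0}',
        "not json",
    ]
    accepted, rejected = split_orders(sample)
    print("accepted:", accepted)
    print("rejected:", rejected)
    print("summary:", summarize(accepted))
```

In a streaming job, the two lists would correspond to two filtered streams written to separate HDFS locations, and the summary to an aggregation written to the console sink.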

The purpose of this project is to dynamically allocate resources for a Hive job at run time. The job details are stored in an XML file that is read during execution. Based on the job name present in the XML file, the business logic looks up an XML file with the matching job name, dynamically assigns the Hive job to a queue in the Capacity Scheduler, sets multiple resource values, and starts the job. The job execution status is visible in the Hadoop ResourceManager web UI.
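The lookup step described above can be sketched as follows. This is a minimal illustration, assuming a hypothetical XML layout (`<jobs>`, `<job name=...>`, `<queue>`, `<property>`) and a hypothetical helper `hive_settings_for`; the project's real file format is not shown in the source. The sketch also assumes Hive runs on the MapReduce engine, where `mapreduce.job.queuename` selects the YARN queue.

```python
import xml.etree.ElementTree as ET

# Hypothetical job-configuration layout; the project's real XML schema is not shown.
JOB_CONFIG_XML = """
<jobs>
  <job name="daily_sales">
    <queue>etl</queue>
    <property name="mapreduce.map.memory.mb">4096</property>
    <property name="mapreduce.reduce.memory.mb">8192</property>
  </job>
  <job name="adhoc_report">
    <queue>default</queue>
    <property name="mapreduce.map.memory.mb">2048</property>
  </job>
</jobs>
"""


def hive_settings_for(job_name, xml_text):
    """Return the Hive SET statements configured for the given job name."""
    root = ET.fromstring(xml_text.strip())
    for job in root.findall("job"):
        if job.get("name") == job_name:
            # The queue comes first, followed by each resource property.
            statements = [f"SET mapreduce.job.queuename={job.findtext('queue')};"]
            for prop in job.findall("property"):
                statements.append(f"SET {prop.get('name')}={prop.text};")
            return statements
    raise KeyError(f"no configuration found for job {job_name!r}")


if __name__ == "__main__":
    # These statements could be prepended to the Hive script before it is submitted.
    for statement in hive_settings_for("daily_sales", JOB_CONFIG_XML):
        print(statement)
```

Keeping the queue and resource values in a file rather than in the Hive script means they can be changed per job without editing the query itself.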

1. Transfer Rs.1000 as the registration amount to the account mentioned below.

2. Send a screenshot of the payment to info@www.npntraining.com with the subject “Big Data Masters Program Pre Registration”.

3. Once we receive the payment, we will acknowledge it through our official email ID.

Account Details

| Field | Value |
| --- | --- |
| Name | Naveen P.N |
| Bank Name | State Bank Of India |
| Account No | 64214275988 |
| Account Type | Current Account |
| IFSC Code | SBIN0040938 |
| Bank Branch | Ramanjaneya Nagar |

Send screenshot to: info@www.npntraining.com

Email Subject: Big Data Masters Program Pre Registration

Registration Fees: Rs.1000

Note: Check batch availability with Naveen sir before pre-registering.

The Big Data Architect learning track has been curated after thorough research and recommendations from industry experts. It will help you differentiate yourself with multi-platform fluency and gain real-world experience with the most important tools and platforms.

All the Big Data classes will be led by Naveen sir, a working professional with more than 12 years of experience in IT as well as teaching.

Yes, you can sit in on an actual live class and experience the quality of training.

The practical experience at NPN Training is worthwhile and different from that of other training institutes in Bangalore. You gain practical knowledge of Big Data through our virtual Big Data software, which is installed on your machine.

Detailed installation guides for setting up the environment are provided in the E-Learning.

NPN Training will provide students with all the course material in hard copies. However, students should carry their individual laptops for the program. Please find the minimum configuration required:

Windows 7 / Mac OS

8 GB RAM is highly preferred

100 GB HDD

64 bit OS

The course is valid for one year, so you can attend any missed sessions in another batch.

Once you have registered and paid for the course, you will have 24/7 access to the E-Learning content.

You will pay the total fees in 2 installments.

Yes, we have group discount options for our training programs. Contact us using the Live Chat link. Our customer service representatives will give you more details.

Trustindex verifies that the original source of the reviews is Google.

I did big data master program Well knowledged teacher answer to the question structured course highly practical training individual care follow up on every one. Naveen is living example for all I said when you finish other course u will have thorough knowledge only one thing need from us is interest and follow what he is saying good course and great master

I joined NPN Training back in July 2021 for Big data Engineer training. Naveen has been the best technical trainer I have come across in my entire career. He teaches topics from scratch, covers every problem hands on and doesn't rest until any issue encountered is resolved. He also provides interview related guidance which is a huge boost for people who are not working directly in big data. Highly recommended for people who are looking to switch to big data but don't have the correct guidance to follow.
